6 Bayesian Spatial Modelling for Areal Data in Stan

6.1 Introduction

6.1.2 Learning outcomes

Today, you will learn how to apply spatial Bayesian models for risk assessments for areal-level discrete outcomes. This is a powerful tool used often in many applications e.g., spatial epidemiology, disaster risk reduction and environmental criminology and many more.

This exercise will focus on casualty data resulting from road accidents by car users in the UK. Road traffic accidents & injuries are a serious problem worldwide. Here, we will estimate the area-specific relative risks (RR) of casualties due to road accidents in local authority areas across England; and we will quantify the levels of uncertainty using a device called exceedance probabilities.

You will learn how to:

- Implement the Spatial intrinsic conditional autoregressive model (ICAR) to areal data in RStan;

- How to use the ICAR model to predict the area-specific relative risks (RR) for areal units and how to determine whether the levels of such risks are statistically significant or not through the 95% credible intervals (95% CrI);

- How to determine the Exceedance Probability i.e., the probability that an area has an excess risk that exceeds a given risk threshold (e.g., RR > 1 (null value));

You can follow the live walkthrough demonstration of the practical and follow the instructions in this recording at your own.

6.1.5 Loading packages

We will need to install the following new packages:

spdep: grants access to functionas(, 'Spatial)` function to coerce non-spatial objects into a spatial object.tmap: grants access to GIS functions. However, we will need to use the previous version i.e.,tmap 3.3-4instead oftmap 4.

install.packages("spdep")

install.packages("remotes")

remotes::install_version("tmap", version = "3.3-4")Now, lets load all packages need specifically for this computer practical:

# Load the packages with library()

library("sf")

library("tmap")

library("spdep")

library("rstan")

library("geostan")

library("SpatialEpi")

library("tidybayes")

library("tidyverse")Upon loading the rstan package, we highly recommend using this code to configure it with RStudio:

This tells RStudio to use the multiple cores in your computer for parallel processing whenever Stan is being implemented. Every time you want to use Stan - make sure to load parallel::detectCores() and rstan_options code.

6.1.6 Datasets & setting up the work directory

Go to your folder CPD-course and create a sub folder called “Day 5”. Here, we will store all our R & Stan scripts and data files. Set your work directory to the Day 5 folder.

For Windows, the code for setting the work directory will be:

For MAC, the code for setting the work directory will be:

The dataset for this practical are:

Road Casualties Data 2015-2020.csvEngland Local Authority Shapefile.shpEngland Regions Shapefile.shp

The dataset were going to start of with is the Road Casualties Data 2015-2020.csv. This data file contains the following information:

- It contains the 307 local authority areas that operate in England. The names and codes are defined under the columns

LAD21NMandLAD21CDrespectively; - It contains the following variables:

Population,CasualtiesandIMDScore. TheCasualtiesis the dependent variable, andIMDScoreis the independent variable. We will needPopulationcolumn to derive the expected number of road casualties to be used as an offset in the Bayesian model.

The shapefile England Local Authority Shapefile.shp contains the boundaries for all 307 local authorities in England. The England Regions Shapefile.shp contains the boundaries for all 10 regions that make up England.

Let us load these dataset to memory:

6.2 Data preparation in RStudio

6.2.1 Calculation for expected numbers

In order to estimate the risk of casualties due to road accidents at an LA-level in England, we will need to first obtain a column that contains estimates from expected number of road casualties. This is derived from the Population column which as denominators or reference population size which is multiplied to the overall incidence rates of road accidents to get the number of expected casualties for each LA area.

You can use the expected() function to compute this column into the road_accident data frame

# calculate the expected number of cases

road_accidents$ExpectedNum <- round(expected(population = road_accidents$Population, cases = road_accidents$Casualties, n.strata = 1), 0)This particular column ExpectedNum is important, it must be computed and used as an offset in our spatial model.

6.2.2 Converting the spatial adjacency matrix to nodes & edges

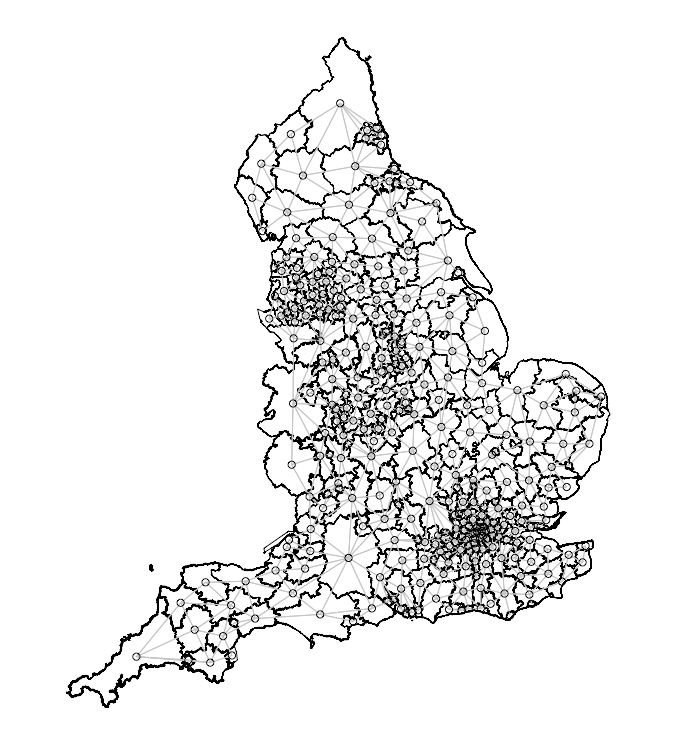

We will need to transform the image below into a list of nodes and edges accordingly as Stan can only identify adjacency with a set of paired nodes with edges that connect them. For instance, node1 is the index region and node2 is the list of neighbouring regions connected to the index region in node1

We can perform this by first merging in the road accident data to the LA-level shapefile. Once this action is completed, we will then need to coerce the spatial object to be from a simple features (i.e., sf) object to the spatial object (i.e., sp).

Here is the code:

# merge the attribute table to the shapefile

spatial.data <- merge(england_LA_shp, road_accidents, by.x = c("LAD21CD", "LAD21NM"), by.y = c("LAD21CD", "LAD21NM"), all.x = TRUE)

# reordering the columns

spatial.data <- spatial.data[, c(3,1,2,4,5,7,6)]

# need to be coerced into a spatial object

sp.object <- as(spatial.data, "Spatial")Now, we are going to need the nodes and edges from the sp.object using the shape2mat() function - this changes it into a matrix object. From the matrix object, we will be able to prepare the data from spatial ICAR model using the prep_icar_data() function. Here, is the code:

# needs to be coerced into a matrix object

adjacencyMatrix <- shape2mat(sp.object)

# we extract the components for the ICAR model

extractComponents <- prep_icar_data(adjacencyMatrix)From the extractComponents object, we will need to extract the following contents:

$group_sizethis is the number of areal units under observation listed in the shapefile (should be the same in the road accidents dataset)$node1are index regions of interest$node2are the other neighbouring regions that are connected to the index region of interest listed innode1$n_edgescreates the network as show area is connected to what neighbourhood. It’s still an adjacency matrix using the queen contiguity matrix but as a network.

Here is the code for performing the extraction:

n <- as.numeric(extractComponents$group_size)

nod1 <- extractComponents$node1

nod2 <- extractComponents$node2

n_edges <- as.numeric(extractComponents$n_edges)Note that the information needed are stored in n, nod1, nod2 and n_edges.

6.2.3 Create the dataset to be compiled in Stan

For the list step in the data preparation, we need to define the variables needed to be compiled in Stan. The outcome Casualties, independent variable IMDScore and offset variable ExpectedNum needs to be extracted into separate vectors. The data needs to be aligned with the areas in shapefile as the result will be churned to that order. So make sure the data is already linked in to the geometries!

Here is the code:

Now, we create our dataset for Stan:

# put all components into a list object

stan.spatial.dataset <- list(N=n, N_edges=n_edges, node1=nod1, node2=nod2, Y=y, X=x, Off_set=e)The above information is going to be passed to Stan in the data block. Now, we are in the position to develop our spatial intrinsic conditional autoregressive (ICAR) model. Now open your Stan script and we begin.

6.3 Creating the script for the Spatial ICAR model

6.3.1 Data block

In the data block, we specify the following:

- The total number of areal unit observations

Nas an integer (i.e.,307), this corresponds ton; - The total number edges

N_edgesas an integer (i.e.,823), this corresponds ton_edges; - The

nodes1based on the size ofN_edges(i.e.,823) must be specified as an array to connect withnodes2, this corresponds tonod1; - The

nodes2based on the size ofN_edges(i.e.,823) must be specified as an array to connect withnodes1, this corresponds tonod2; - We define our

Youtcome (i.e., road accidents) as anarrayof sizeN(i.e.,307) which is aninteger, this corresponds toy; - We define our independent variables

Xas avectorof sizeN(i.e.,307), this corresponds tox; - We must define our offset for the expected numbers

Off_setas a vector of sizeN(i.e.,307), this corresponds toe;

Here is what our modeldata block` would look like:

data {

int<lower=0> N; // number of spatial units or neighbourhoods

int<lower=0> N_edges; // number of edges connecting adjacent areas using Queens contiguity

array[N_edges] int<lower=1, upper=N> node1; // list of index areas showing which spatial units are neighbours

array[N_edges] int<lower=1, upper=N> node2; // list of neighbouring areas showing the connection to index spatial unit

array[N] int<lower=0> Y; // dependent variable

vector<lower=0>[N] X; // Single independent variable

vector<lower=0>[N] Off_set; // offset variable

}6.3.2 Transformed data block

We are going to include a transformed data block. Here, we are simply changing the expected numbers by taking its log() and creating another vector called log_offset. This will be added to the poisson_log() sampling statement in our likelihood function of the spatial model to account for the reference population in England.

Here, we specify it as follows:

data {

int<lower=0> N; // number of spatial units or neighbourhoods

int<lower=0> N_edges; // number of edges connecting adjacent areas using Queens contiguity

array[N_edges] int<lower=1, upper=N> node1; // list of index areas showing which spatial units are neighbours

array[N_edges] int<lower=1, upper=N> node2; // list of neighbouring areas showing the connection to index spatial unit

array[N] int<lower=0> Y; // dependent variable

vector<lower=0>[N] X; // Single independent variable

vector<lower=0>[N] Off_set; // offset variable

}

transformed data {

vector[N] log_Offset = log(Off_set); // use the expected cases as an offset and add to the regression model

}6.3.3 Parameters block

For the parameters block, we will need to specify the following:

- The global intercept i.e.,

alphafor the entire study area (i.e., average risk of road accidents on a population-level); - The coefficient

betafor our independent variableXwhich is theIMDScore; - We also specify

sigmaas arealvalue which is some error or standard deviation that is multiplied to the combined effects of our structured and unstructured random effects; - We define the structured spatial random effects

phito be vector of sizeN; - We define the unstructured spatial random effects

thetato be vector of sizeN; - We define

rhoas the proportion of the variation coming from the structured spatial random effects;

We add the parameters block as follows:

data {

int<lower=0> N; // number of spatial units or neighbourhoods

int<lower=0> N_edges; // number of edges connecting adjacent areas using Queens contiguity

array[N_edges] int<lower=1, upper=N> node1; // list of index areas showing which spatial units are neighbours

array[N_edges] int<lower=1, upper=N> node2; // list of neighbouring areas showing the connection to index spatial unit

array[N] int<lower=0> Y; // dependent variable

vector<lower=0>[N] X; // Single independent variable

vector<lower=0>[N] Off_set; // offset variable

}

transformed data {

vector[N] log_Offset = log(Off_set); // use the expected cases as an offset and add to the regression model

}

parameters {

real alpha; // intercept

real beta; // covariates

real<lower=0> sigma; // overall standard deviation

real<lower=0, upper=1> rho; // proportion unstructured vs. spatially structured variance

vector[N] theta; // unstructured random effects

vector[N] phi; // structured spatial random effects

}6.3.4 Transformed parameters block

Here, we calculate the combined random effects from the structured and unstructured component for our model:

data {

int<lower=0> N; // number of spatial units or neighbourhoods

int<lower=0> N_edges; // number of edges connecting adjacent areas using Queens contiguity

array[N_edges] int<lower=1, upper=N> node1; // list of index areas showing which spatial units are neighbours

array[N_edges] int<lower=1, upper=N> node2; // list of neighbouring areas showing the connection to index spatial unit

array[N] int<lower=0> Y; // dependent variable

vector<lower=0>[N] X; // Single independent variable

vector<lower=0>[N] Off_set; // offset variable

}

transformed data {

vector[N] log_Offset = log(Off_set); // use the expected cases as an offset and add to the regression model

}

parameters {

real alpha; // intercept

real beta; // covariates

real<lower=0> sigma; // overall standard deviation

real<lower=0, upper=1> rho; // proportion unstructured vs. spatially structured variance

vector[N] theta; // unstructured random effects

vector[N] phi; // structured spatial random effects

}

transformed parameters {

vector[N] combined; // values derived from adding the unstructure and structured effect of each area

combined = sqrt(1 - rho) * theta + sqrt(rho) * phi; // formulation for the combined random effect

}6.3.5 Model block

We build our likelihood function and specify the priors for each parameter under the model block. We are using a typical Poisson model with a log link function as we are assuming there’s some linear relationship between the road accident counts and IMD score, but here we are also taking into account the combined spatial and non-spatial random effects:

data {

int<lower=0> N; // number of spatial units or neighbourhoods

int<lower=0> N_edges; // number of edges connecting adjacent areas using Queens contiguity

array[N_edges] int<lower=1, upper=N> node1; // list of index areas showing which spatial units are neighbours

array[N_edges] int<lower=1, upper=N> node2; // list of neighbouring areas showing the connection to index spatial unit

array[N] int<lower=0> Y; // dependent variable

vector<lower=0>[N] X; // Single independent variable

vector<lower=0>[N] Off_set; // offset variable

}

transformed data {

vector[N] log_Offset = log(Off_set); // use the expected cases as an offset and add to the regression model

}

parameters {

real alpha; // intercept

real beta; // covariates

real<lower=0> sigma; // overall standard deviation

real<lower=0, upper=1> rho; // proportion unstructured vs. spatially structured variance

vector[N] theta; // unstructured random effects

vector[N] phi; // structured spatial random effects

}

transformed parameters {

vector[N] combined; // values derived from adding the unstructure and structured effect of each area

combined = sqrt(1 - rho) * theta + sqrt(rho) * phi; // formulation for the combined random effect

}

model {

Y ~ poisson_log(log_Offset + alpha + X * beta + combined * sigma); // likelihood function: multivariable Poisson ICAR regression model

// setting priors

alpha ~ normal(0.0, 1.0); // prior for alpha: weakly informative

beta ~ normal(0.0, 1.0); // prior for betas: weakly informative

theta ~ normal(0.0, 1.0); // prior for theta: weakly informative

sigma ~ normal(0.0, 1.0); // prior for sigma: weakly informative

rho ~ beta(0.5, 0.5); // prior for rho

target += -0.5 * dot_self(phi[node1] - phi[node2]); // calculates the spatial weights

sum(phi) ~ normal(0, 0.001 * N); // priors for phi

}6.3.6 Generated quantities block

Lastly, we instruct Stan on the parameters we want to report. We want them as relative risk ratio (RR). We can use the generated quantities block to obtain these estimates by exponentiation of the ICAR regression model:

data {

int<lower=0> N; // number of spatial units or neighbourhoods

int<lower=0> N_edges; // number of edges connecting adjacent areas using Queens contiguity

array[N_edges] int<lower=1, upper=N> node1; // list of index areas showing which spatial units are neighbours

array[N_edges] int<lower=1, upper=N> node2; // list of neighbouring areas showing the connection to index spatial unit

array[N] int<lower=0> Y; // dependent variable

vector<lower=0>[N] X; // Single independent variable

vector<lower=0>[N] Off_set; // offset variable

}

transformed data {

vector[N] log_Offset = log(Off_set); // use the expected cases as an offset and add to the regression model

}

parameters {

real alpha; // intercept

real beta; // covariates

real<lower=0> sigma; // overall standard deviation

real<lower=0, upper=1> rho; // proportion unstructured vs. spatially structured variance

vector[N] theta; // unstructured random effects

vector[N] phi; // structured spatial random effects

}

transformed parameters {

vector[N] combined; // values derived from adding the unstructure and structured effect of each area

combined = sqrt(1 - rho) * theta + sqrt(rho) * phi; // formulation for the combined random effect

}

model {

Y ~ poisson_log(log_Offset + alpha + X * beta + combined * sigma); // likelihood function: multivariable Poisson ICAR regression model

// setting priors

alpha ~ normal(0.0, 1.0); // prior for alpha: weakly informative

beta ~ normal(0.0, 1.0); // prior for betas: weakly informative

theta ~ normal(0.0, 1.0); // prior for theta: weakly informative

sigma ~ normal(0.0, 1.0); // prior for sigma: weakly informative

rho ~ beta(0.5, 0.5); // prior for rho

target += -0.5 * dot_self(phi[node1] - phi[node2]); // calculates the spatial weights

sum(phi) ~ normal(0, 0.001 * N); // priors for phi

}

generated quantities {

vector[N] eta = alpha + X * beta + combined * sigma; // compute eta and exponentiate into mu

vector[N] rr_mu = exp(eta); // output the neighbourhood-specific relative risks in mu

real rr_beta = exp(beta); // output the risk ratios for each coefficient

real rr_alpha = exp(alpha); // output the risk ratios for the intercept

}Well done! You have coded your first spatial risk model. Alright, let us save the script as icar_poisson_model.stan. We can now compile and run it through RStudio to get our posterior estimates as risk ratios (RR) for each areas. We can also get the exceedance probabilities. The next steps are easy from this point onwards.

6.4 Compiling Stan code for the Spatial ICAR risk modelling

6.4.1 Printing of the global results

Now, let us turn our attention to RStudio. Using the stan() to compile the saved script to obtain the posterior estimation of the parameters from our model:

# Start the clock

ptm <- proc.time()

icar_poisson_fit = stan("icar_poisson_model.stan", data=stan.spatial.dataset, iter=20000, control = list(max_treedepth = 12), chains=6, verbose = FALSE)

# Stop the clock

proc.time() - ptmWe can see our estimated results for alpha, beta and sigma:

# remove that annoying scientific notation

options(scipen = 999)

summary(icar_poisson_fit, pars=c("alpha", "beta", "sigma"), probs=c(0.025, 0.975))$summaryOutput from summary()$summary function:

mean se_mean sd 2.5% 97.5% n_eff Rhat

alpha 0.162965832 0.00052694848 0.084071562 -0.001856892 0.32898381 25454.344 1.000094

beta -0.009775948 0.00002545072 0.004085887 -0.017779845 -0.00177424 25773.456 1.000076

sigma 0.627153399 0.00078449468 0.052970350 0.538581209 0.74606811 4559.169 1.001467Here is the interpretation:

alphais the global mean (or average) in the population under study. It means on average the road accident occurrence in England for the period 2015 to 2020 is 0.1629 (95% CrI: -0.00185 to 0.3289). If we take the exponent of this value i.e.,exp(0.162965832)- we get the relative risks of road accidents which is 1.17 times higher in England (95% CrI: 0.998 to 1.38). The result is not significant as the null value of 1 exists between its lower and upper limits.betais the coefficient forIMDScore. This means that its yields a decrease on average for road accidents throughout England for more deprived areas -0.0097 (95% CI: -0.0177 to -0.00177424). This is negligible negative relationship that is statistically significant; while it is significant, if we exponentiate these values its really close to the null value (1), so we can rule out this relationship!sigmais the overall standard deviation or global error.

Note, we can view the spatial effects i.e., phi for each area using this code:

# show first 6 rows only instead of the full 307

summary(icar_poisson_fit, pars=c("phi"), probs=c(0.025, 0.975))$summaryAlternatively, you can use the print() function to get a detailed output:

# print full table to avoid some rows from being omitted.

options(max.print = 100000)

# print the results

print(icar_poisson_fit, pars=c("alpha", "beta", "rr_alpha", "rr_beta", "rr_mu", "sigma"), probs=c(0.025, 0.975))Output from print() function:

Inference for Stan model: anon_model.

6 chains, each with iter=20000; warmup=10000; thin=1;

post-warmup draws per chain=10000, total post-warmup draws=60000.

mean se_mean sd 2.5% 97.5% n_eff Rhat

alpha 0.16 0 0.08 0.00 0.33 25454 1

beta -0.01 0 0.00 -0.02 0.00 25773 1

sigma 0.63 0 0.05 0.54 0.75 4559 1

Samples were drawn using NUTS(diag_e) at Fri Mar 8 07:41:39 2024.

For each parameter, n_eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor on split chains (at

convergence, Rhat=1).6.4.2 Rapid diagnostics of the rHATs

Before mapping the relative risks, we must check if the any of the estimates i.e., alpha, beta, sigma and all phi exceed the rHAT value of 1.05. This is an indication that the iterations did not perform well if an rHAT for a parameter is above 1.05. We can do a rapid checks to see which parameter is valid or not by creating a binary variable of 1’s (Valid) and 0’s (Not valid). We can tabulate it to see the numbers:

# diagnostic check on the rHats - put everything into a data frame

diagnostic.checks <- as.data.frame(summary(icar_poisson_fit, pars=c("alpha", "beta", "rr_alpha", "rr_beta", "rr_mu", "sigma", "phi", "lp__"), probs=c(0.025, 0.5, 0.975))$summary)

# create binary variable

diagnostic.checks$valid <- ifelse(diagnostic.checks$Rhat < 1.05, 1, 0)

# tabulate it

table(diagnostic.checks$valid)Everything is okay - all outputted parameters have an rHAT < 1.05. We are free to generate our maps.

NOTES: To avoid such complications, it is always to best to run about 10000, 15000 or more iterations. Usually, shorter iterations yield low effective sample sizes after thinning/warm-up samples are discarded, which in turn, may lead to complications that may cause the rHAT to be above 1.05.

6.4.3 Extraction of the area-specific relative risks

If you run the following code:

# show first 6 rows only instead of the full 307

head(summary(icar_poisson_fit, pars=c("rr_mu"), probs=c(0.025, 0.975))$summary)We see the relative risk (RR) estimates for the first areas under the column mu with their corresponding credibility limits under the 2.5% and 97.5% column. We are going to extract this information into a data frame and applying the cleaning and renaming of columns accordingly:

# extraction key posterior results for the generated quantities

relativeRisk.results <- as.data.frame(summary(icar_poisson_fit, pars=c("rr_mu"), probs=c(0.025, 0.975))$summary)

# now cleaning up this table up

# first, insert clean row numbers to new data frame

row.names(relativeRisk.results) <- 1:nrow(relativeRisk.results)

# second, rearrange the columns into order

relativeRisk.results <- relativeRisk.results[, c(1,4,5,7)]

# third, rename the columns appropriately

colnames(relativeRisk.results)[1] <- "rr"

colnames(relativeRisk.results)[2] <- "rrlower"

colnames(relativeRisk.results)[3] <- "rrupper"

colnames(relativeRisk.results)[4] <- "rHAT"

# view clean table

head(relativeRisk.results)See clean table:

rr rrlower rrupper rHAT valid

1 0.6295692 0.5759348 0.6859993 0.9999354 1

2 0.8043656 0.7550300 0.8555160 0.9999809 1

3 0.6518326 0.6068501 0.6991145 0.9999186 1

4 0.6292392 0.5920648 0.6674334 0.9999475 1

5 0.8885551 0.8284516 0.9505547 1.0000141 1

6 0.7971718 0.7451160 0.8513495 0.9999757 1Insert these columns into the spatial.data object as follow:

# now, we proceed to generate our risk maps

# align the results to the areas in shapefile

spatial.data$rr <- relativeRisk.results[, "rr"]

spatial.data$rrlower <- relativeRisk.results[, "rrlower"]

spatial.data$rrupper <- relativeRisk.results[, "rrupper"]These relative will allow us to see the mapped risks of road accidents across local authorities in England. We also want a supporting map indicate whether the risks are significant or not. Here, we create an extra column in the spatial.data called Significance.

# create categories to define if an area has significant increase or decrease in risk, or nothing all

spatial.data$Significance <- NA

spatial.data$Significance[spatial.data$rrlower<1 & spatial.data$rrupper>1] <- 0 # NOT SIGNIFICANT

spatial.data$Significance[spatial.data$rrlower==1 | spatial.data$rrupper==1] <- 0 # NOT SIGNIFICANT

spatial.data$Significance[spatial.data$rrlower>1 & spatial.data$rrupper>1] <- 1 # SIGNIFICANT INCREASE

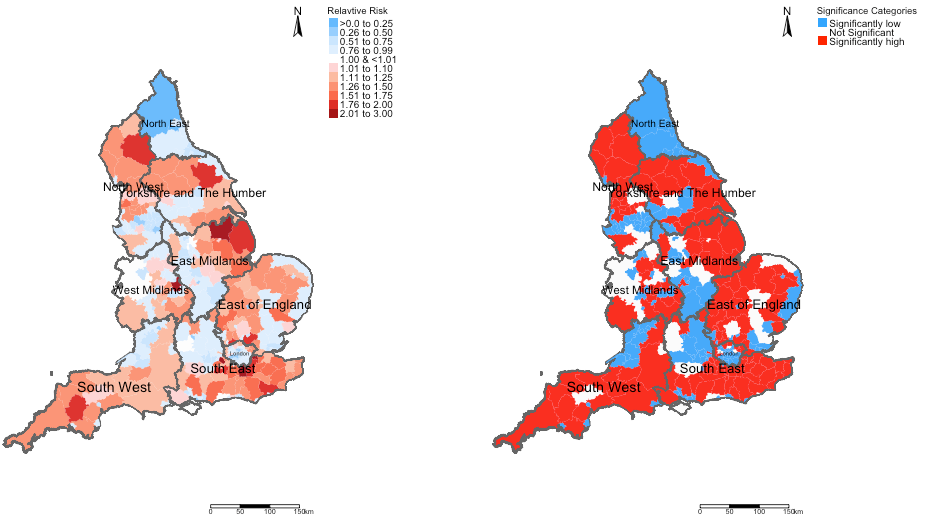

spatial.data$Significance[spatial.data$rrlower<1 & spatial.data$rrupper<1] <- -1 # SIGNIFICANT DECREASE6.4.4 Mapping of RR and significance

The next set of codes are all cosmetics for the creating our risk map for road accidents. Here is the code:

# For map design for the relative risk -- you want to understand or get a handle on what the distribution for risks look like

# this would inform you of how to create the labelling for the legends when make a map in tmap

summary(spatial.data$rr)

hist(spatial.data$rr)

# creating the labels

RiskCategorylist <- c(">0.0 to 0.25", "0.26 to 0.50", "0.51 to 0.75", "0.76 to 0.99", "1.00 & <1.01",

"1.01 to 1.10", "1.11 to 1.25", "1.26 to 1.50", "1.51 to 1.75", "1.76 to 2.00", "2.01 to 3.00")

# next, we are creating the discrete colour changes for my legends and want to use a divergent colour scheme

# scheme ranges from extreme dark blues to light blues to white to light reds to extreme dark reds

# you can pick your own colour choices by checking out this link [https://colorbrewer2.org]

RRPalette <- c("#65bafe","#98cffe","#cbe6fe","#dfeffe","white","#fed5d5","#fcbba1","#fc9272","#fb6a4a","#de2d26","#a50f15")

# categorising the risk values to match the labelling in RiskCategorylist object

spatial.data$RelativeRiskCat <- NA

spatial.data$RelativeRiskCat[spatial.data$rr>= 0 & spatial.data$rr <= 0.25] <- -4

spatial.data$RelativeRiskCat[spatial.data$rr> 0.25 & spatial.data$rr <= 0.50] <- -3

spatial.data$RelativeRiskCat[spatial.data$rr> 0.50 & spatial.data$rr <= 0.75] <- -2

spatial.data$RelativeRiskCat[spatial.data$rr> 0.75 & spatial.data$rr < 1] <- -1

spatial.data$RelativeRiskCat[spatial.data$rr>= 1.00 & spatial.data$rr < 1.01] <- 0

spatial.data$RelativeRiskCat[spatial.data$rr>= 1.01 & spatial.data$rr <= 1.10] <- 1

spatial.data$RelativeRiskCat[spatial.data$rr> 1.10 & spatial.data$rr <= 1.25] <- 2

spatial.data$RelativeRiskCat[spatial.data$rr> 1.25 & spatial.data$rr <= 1.50] <- 3

spatial.data$RelativeRiskCat[spatial.data$rr> 1.50 & spatial.data$rr <= 1.75] <- 4

spatial.data$RelativeRiskCat[spatial.data$rr> 1.75 & spatial.data$rr <= 2.00] <- 5

spatial.data$RelativeRiskCat[spatial.data$rr> 2.00 & spatial.data$rr <= 10] <- 6

# check to see if legend scheme is balanced - if a number is missing that categorisation is wrong!

table(spatial.data$RelativeRiskCat)Generating the maps as a paneled output:

# map of relative risk

rr_map <- tm_shape(spatial.data) +

tm_fill("RelativeRiskCat", style = "cat", title = "Relavtive Risk", palette = RRPalette, labels = RiskCategorylist) +

tm_shape(england_Region_shp) + tm_polygons(alpha = 0.05) + tm_text("name", size = "AREA") +

tm_layout(frame = FALSE, legend.outside = TRUE, legend.title.size = 0.8, legend.text.size = 0.7) +

tm_compass(position = c("right", "top")) + tm_scale_bar(position = c("right", "bottom"))

# map of significance regions

sg_map <- tm_shape(spatial.data) +

tm_fill("Significance", style = "cat", title = "Significance Categories",

palette = c("#33a6fe", "white", "#fe0000"), labels = c("Significantly low", "Not Significant", "Significantly high")) +

tm_shape(england_Region_shp) + tm_polygons(alpha = 0.10) + tm_text("name", size = "AREA") +

tm_layout(frame = FALSE, legend.outside = TRUE, legend.title.size = 0.8, legend.text.size = 0.7) +

tm_compass(position = c("right", "top")) + tm_scale_bar(position = c("right", "bottom"))

# create side-by-side plot

tmap_arrange(rr_map, sg_map, ncol = 2, nrow = 1)Output:

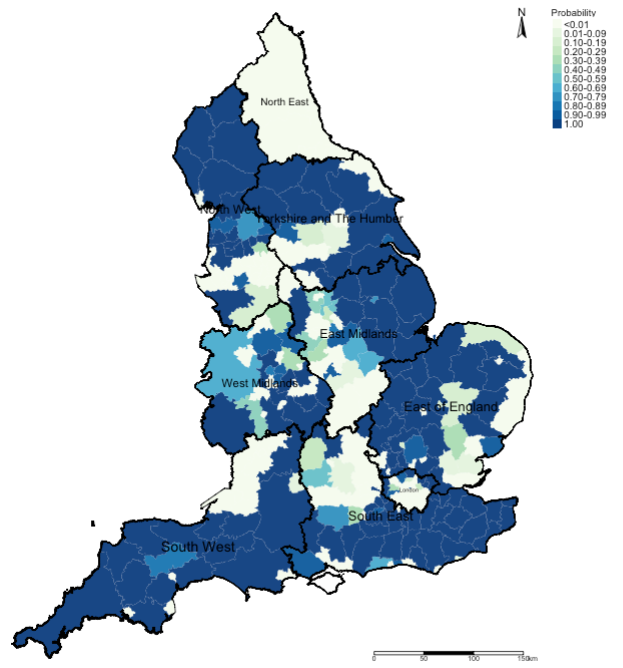

6.4.5 Extracting and mapping of the exceedance probabilities

Exceedance probabilities allows the user to quantify the levels of uncertainty surrounding the risks we quantified. We can use a threshold for instance an RR > 1 and ask what is the probability that an area has an excess risk of road accidents and visualise this as well.

Just like the RRs, we are going to extract this information into a vector and include it into our spatial.data object. For this extraction, we will need to use functions from the tidybayes and tidyverse packages i.e., spread_draws(), group_by(), summarise() and pull():

# extract the exceedence probabilities from the icar_possion_fit object

# compute the probability that an area has a relative risk ratio > 1.0

threshold <- function(x){mean(x > 1.00)}

excProbrr <- icar_poisson_fit %>% spread_draws(rr_mu[i]) %>%

group_by(i) %>% summarise(rr_mu=threshold(rr_mu)) %>%

pull(rr_mu)

# insert the exceedance values into the spatial data frame

spatial.data$excProb <- excProbrrThe next set of codes are all cosmetics for the creating our probability exceedance map for road accidents. Here is the code:

# create the labels for the probabilities

ProbCategorylist <- c("<0.01", "0.01-0.09", "0.10-0.19", "0.20-0.29", "0.30-0.39", "0.40-0.49","0.50-0.59", "0.60-0.69", "0.70-0.79", "0.80-0.89", "0.90-0.99", "1.00")

# categorising the probabilities in bands of 10s

spatial.data$ProbCat <- NA

spatial.data$ProbCat[spatial.data$excProb>=0 & spatial.data$excProb< 0.01] <- 1

spatial.data$ProbCat[spatial.data$excProb>=0.01 & spatial.data$excProb< 0.10] <- 2

spatial.data$ProbCat[spatial.data$excProb>=0.10 & spatial.data$excProb< 0.20] <- 3

spatial.data$ProbCat[spatial.data$excProb>=0.20 & spatial.data$excProb< 0.30] <- 4

spatial.data$ProbCat[spatial.data$excProb>=0.30 & spatial.data$excProb< 0.40] <- 5

spatial.data$ProbCat[spatial.data$excProb>=0.40 & spatial.data$excProb< 0.50] <- 6

spatial.data$ProbCat[spatial.data$excProb>=0.50 & spatial.data$excProb< 0.60] <- 7

spatial.data$ProbCat[spatial.data$excProb>=0.60 & spatial.data$excProb< 0.70] <- 8

spatial.data$ProbCat[spatial.data$excProb>=0.70 & spatial.data$excProb< 0.80] <- 9

spatial.data$ProbCat[spatial.data$excProb>=0.80 & spatial.data$excProb< 0.90] <- 10

spatial.data$ProbCat[spatial.data$excProb>=0.90 & spatial.data$excProb< 1.00] <- 11

spatial.data$ProbCat[spatial.data$excProb == 1.00] <- 12

# check to see if legend scheme is balanced

table(spatial.data$ProbCat)Generating the probability map output:

# map of exceedance probabilities

tm_shape(spatial.data) +

tm_fill("ProbCat", style = "cat", title = "Probability", palette = "GnBu", labels = ProbCategorylist) +

tm_shape(england_Region_shp) + tm_polygons(alpha = 0.05, border.col = "black") + tm_text("name", size = "AREA") +

tm_layout(frame = FALSE, legend.outside = TRUE, legend.title.size = 0.8, legend.text.size = 0.7) +

tm_compass(position = c("right", "top")) + tm_scale_bar(position = c("right", "bottom"))Output:

Example Interpretation: We can see that the risk patterns for road accidents across England is quite heterogeneous. While it is quite pronounced in all 10 regions in England, the burden is quite significant in South West region with large numbers of local authorities having an increased risk which are statistically significant. While, there’s significant limitation the models used here - perhaps, the Department for Transport should do an investigation on these patterns starting with the South West area.

6.5 Task

Try your hand on this problem in Stan: Build a spatial ICAR model using data on counts of low birth weights in Georgia US to create the following maps:

- Map showing the relative risk of low birth weight across the 163 counties in Georgia

- Map showing the statistical significance of the relative risk

- Map showing the Exceedance Probabilities using the threshold of RR > 1

Use the following dataset:

Low_birth_weights_data.csv: ContainsNAME,Lowbirths(Counts) andExpectedNumber

Georgia_Shapefile.shp: ContainsNAME